What's More Accurate, a Microphone or Our Ears?

Which is more accurate, our ears or a microphone? Most would surely answer a microphone. It is a scientific measurement device, capable of reliably providing objective numeric values of sound. Yet the question shouldn’t be answered so simply. When I talked with an acoustical physicist friend about this question, his first response was that it was an ill-conceived question. Indeed, when reviewing this article, Dr. Floyd Toole noted:

Technical measurements are demonstrably precise, repeatable events. Hearing perception is not. Obviously, the perceived event is definitive – if it does not sound good, it isn’t good. The task is to correlate what we measure with what we perceive – This is psychoacoustics.

He goes on to note that his book¹ provides extensive evidence of a strong correlation between the right set of measurements and perceived sound quality in a room. The key point is that Toole refers to specific sets of technical measurements, taken under specific conditions, that correlate with our perception of good sound in a room. That is not the same as saying that any measurement taken in a room can be equated with good sound. In fact, I believe that is not true, and the kinds of measurements most of us take in our rooms have little relationship to the set of technical measurements Toole describes. Establishing that correlation with perception inherently relies on unbiased and robust methods of subjective inquiry, such as the double-blind test. In other words, Toole is right, but we must not confuse this rigorous scientific work with consumer-level room measurements. There is a vast difference between the notion that speakers should be designed based on rigorous technical measurements rather than inherently biased subjective listening, and the notion that we should distrust our own perception and enjoyment of our systems in a room relative to the measurements we can take ourselves.

When we talk about accuracy, we first need to decide what we mean by it. Accuracy is always relative, and in this case we need to decide what we are doing and under what circumstances we seek accuracy. This article treats accuracy as a matter of assessing what we hear. Two specific cases are setting up a home theater or 2-channel system and assessing a room’s acoustic quality. We take this stance because a sound reproduction system ultimately serves the primary purpose of providing entertainment. Its goal is to accurately reproduce the sound encoded on some disc or in some file for us to hear and enjoy. Where this notion goes awry is when we conflate accuracy with scientific rigor. A scientifically rigorous assessment of the frequency response of a speaker in a room is not the same as knowing whether the speaker sounds good in that room, and a microphone cannot provide us with that answer.

To fully understand this, we need to know three key concepts:

- what is human hearing perception and how does it work?

- how do a microphone and its associated software measure sound?

- how is sound reproduced in a room?

We will then discuss the implications of these three concepts in order to understand what is the most accurate manner in which to “measure” sound quality: through a microphone or with our ears.

Accuracy?

In trying to establish which is more accurate, our ears or a measurement microphone, we first need to consider our point of reference. Accurate compared to what? I bet most readers had a knee-jerk reaction and assumed that accuracy means the precise measurement device we call a microphone. This line of thinking is based on a false premise. We can’t really compare our hearing to a microphone, nor is it fair to call one more accurate than the other, because of the problem of defining accuracy: accurate is what we hear, and nothing is a better representation of our aural reality than our own hearing. To understand this, let’s start with some definitions.

Accurate: (Oxford Dictionary Definition)

- (of information, measurements, statistics, etc.) correct in all details; exact.

- (of an instrument or method) capable of giving accurate information.

- faithfully or fairly representing the truth about someone or something.

From these definitions, we can see why most would assume the microphone is more accurate than human hearing. If our definition is “correct in all details or exact,” that could describe a scientific measurement device such as a microphone. Yet the question we posed at the outset wasn’t which device gives a better representation of physical reality; it was simply which is more accurate. Since we don’t play back music and movies simply to measure the faithfulness of the reproduction against the source, but rather for our own enjoyment, the human experience becomes central to our definition of accuracy. A better definition, then, is the last one: faithfully or fairly representing the truth, in this case the truth of the original content. It isn’t the measurement of that faithfulness that matters (something that, as I will explain, is exceedingly difficult to do), but our own perception of it. It should sound correct to us as listeners. Now that we have established that accuracy is our own perception of the faithfulness of the reproduction, we need to think about how we perceive it and where errors may come into play.

Human Hearing

While all aspects of human perception are something to marvel at, human hearing is, for me, truly astonishing. Human hearing can do things that we have been completely unable to recreate or better through computer automation. Our ears are themselves an astonishing sensory organ, and their connection to what amounts to a supercomputer allows for certain aural skills that, when laid on paper, seem supernatural. Here we are going to attempt to briefly describe how the human hearing system operates and give examples of just a few small feats that this system is capable of.

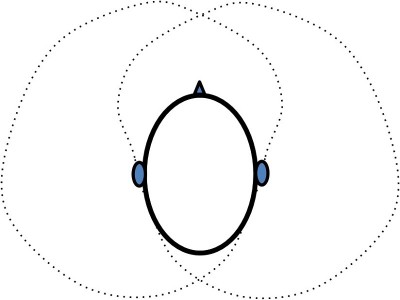

Most people are well aware that we hear with our ears. They understand that we have this flesh blob attached to the side of our head with an opening, that deep inside that opening is something called an eardrum, and that this somehow detects sound that our brain processes for us as hearing. What most people do not realize is just how complex this process really is, and the role each part of the ear plays. For example, that flesh blob, known as the Pinna, plays a major role in our ability to hear where sounds are coming from. The shape of our ears and the Pinna itself give our hearing a forward bias that makes our detection of sound directional. Just as a speaker or microphone has a polar pattern (the variation of its frequency response with angle), so do our ears² (Fig 1). The Pinna also modifies the response of a sound in a particular way depending on the angle at which it enters the ear, and our brain becomes accustomed to this and uses it to detect sound direction. This contributes to what is known as our Head Related Transfer Function (HRTF).

Fig 1: Hearing polar response at 4kHz

It should be obvious why directional hearing is important; its evolutionary benefit is clear. If we couldn’t hear predators coming, we could not escape them. Surely, we would have been eaten by the many giant ground sloths that roamed the earth during early human evolution. Further, we would be unable to hunt prey successfully. While many prey animals have developed effective camouflage, few have found ways to hide the sound of their movement. Hearing is critical to tracking.

In talking about directional hearing, however, I’ve really only discussed how the shape of the ear affects our ability to detect direction. Without two ears, what is known as binaural hearing, there is little we could do to detect sound source azimuth, the left-to-right direction. We do have two ears, and each detects sound independently; our brain then compares the two signals, using their differences to place a sound object on our aural soundstage. Azimuth detection is largely a result of two functions of binaural hearing. The first is Interaural Level Difference (ILD): a sound closer to one ear will be louder in that ear. This should make intuitive sense: as objects get closer, they get louder. Our brain has evolved to recognize that objects changing in loudness are moving closer or farther away, and thus that objects louder on one side of us are usually closer to that side. That works well at high frequencies, but not so well at low frequencies. As frequency drops, the wavelength grows, and once it is large compared with the head, the sound diffracts, or wraps around our head. The level difference between our left and right ears then becomes insignificant, and our brain cannot detect direction by level. This is where a different mechanism takes over: Interaural Time Difference (ITD). This phenomenon is fascinating. As many of us know, a time difference produces a phase difference at any given frequency. Even sound that wraps around our head takes longer to reach the far ear than the near one, so there is a phase difference between the sound at each ear. Our brain can process this phase and time difference and, through it, detect the azimuth of a sound source at lower frequencies.
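To put rough numbers on the ITD and diffraction points above, here is a minimal Python sketch using Woodworth’s spherical-head approximation, ITD = (a/c)(θ + sin θ). The head radius of 8.75 cm and the speed of sound of 343 m/s are assumed typical values, not measurements. A real head is not a sphere, so treat the outputs as ballpark figures.

```python
import math

HEAD_RADIUS_M = 0.0875   # assumed typical head radius (8.75 cm)
SPEED_OF_SOUND = 343.0   # m/s, air at roughly 20 degrees C


def woodworth_itd(azimuth_deg: float) -> float:
    """ITD in seconds for a rigid spherical head (Woodworth's approximation).

    Azimuth is measured from straight ahead (0) to directly to one side (90).
    """
    theta = math.radians(azimuth_deg)
    return (HEAD_RADIUS_M / SPEED_OF_SOUND) * (theta + math.sin(theta))


def interaural_phase_deg(azimuth_deg: float, freq_hz: float) -> float:
    """Phase difference between the ears produced by that ITD at one frequency."""
    return 360.0 * freq_hz * woodworth_itd(azimuth_deg)


def wavelength_m(freq_hz: float) -> float:
    return SPEED_OF_SOUND / freq_hz


head_diameter = 2 * HEAD_RADIUS_M
print(f"largest ITD (source at 90 deg): {woodworth_itd(90) * 1e6:.0f} us")
for f in (200, 500, 1500, 4000):
    print(
        f"{f:>5} Hz: wavelength {wavelength_m(f):.3f} m "
        f"({wavelength_m(f) / head_diameter:.1f}x head width), "
        f"interaural phase at 90 deg {interaural_phase_deg(90, f):.0f} deg"
    )
```

At 200 Hz, the wavelength is roughly ten times the width of this model head, which is why it diffracts around it. At 4 kHz, it is about half the head’s width. Once the interaural phase passes 180 degrees, the same phase could correspond to more than one delay. That is one reason phase cues lose their usefulness at higher frequencies.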

Editorial Note: Our Brain’s Use of ITD and ILD

There is no hard separation between our brain’s use of ITD and ILD. Instead, there is a gradual shift from ILD to ITD, with a region of overlap in which both are used simultaneously. Below around 500 Hz, we primarily use ITD to evaluate sound direction; above around 1500 Hz, we mainly use ILD. These numbers are rough estimates, not hard limits. Between these two frequencies, both mechanisms are at work for sustained sounds. Neither works particularly well in this transition zone, which is partly why we use them together. Transient sound events are handled differently: the ITD of a transient’s envelope is powerful localization information. Such transient events allow us to localize sounds in complex acoustical environments, e.g. a live concert hall. Once a direction is perceived from transient information, a kind of “flywheel” effect sets in. The sound source then continues to be perceived in that location despite the absence of reliable acoustical cues. It has been shown that our hearing is sensitive to interaural time differences as small as 10 microseconds³, or to a shift of as little as 1 degree in azimuth in front of our heads (it is significantly worse behind us). Even so, we also use our vision to help place objects. Our brain combines information from hearing and vision to precisely locate an object. This is especially helpful since our hearing is less precise in the vertical dimension.
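As a quick sanity check on how a 10 microsecond ITD relates to roughly a degree of azimuth, the sketch below inverts a spherical-head ITD model near straight ahead. In that region, Woodworth’s (a/c)(θ + sin θ) is approximately (2a/c)θ. The head radius and speed of sound are assumed typical values, not figures from the cited research.

```python
import math

HEAD_RADIUS_M = 0.0875   # assumed typical head radius
SPEED_OF_SOUND = 343.0   # m/s


def azimuth_for_itd_deg(itd_s: float) -> float:
    """Azimuth (degrees) that produces a given small ITD near straight ahead.

    Uses the small-angle form of Woodworth's model: theta + sin(theta) ~= 2 * theta.
    """
    theta = itd_s * SPEED_OF_SOUND / (2 * HEAD_RADIUS_M)
    return math.degrees(theta)


print(f"10 us of ITD ~= {azimuth_for_itd_deg(10e-6):.2f} deg of azimuth")
```

Under these assumptions, 10 microseconds works out to a little over one degree. The two thresholds quoted above are therefore roughly consistent with each other.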

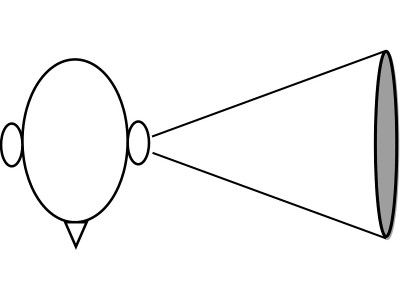

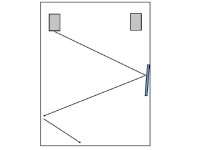

As noted earlier, the outside of the ear, the Pinna, plays a role in our directional hearing. In fact, the shape and construction of our head overall, combined with our Pinna specifically, create a Head Related Transfer Function (HRTF). HRTFs are interesting; they are like fingerprints. Because we all have differently shaped heads and ears, each of us has a unique HRTF. Sure, they share far more in common than not, but no two are the same. What is the HRTF? It describes how the external ear, head, and shoulders modify the response of sound arriving from a specific angle by the time it reaches the eardrums. Sound is attenuated at some frequencies and boosted at others according to our HRTF. Amazingly, our brain learns what our HRTF is and takes advantage of it. The key that I missed when I first learned about the HRTF is that the transfer function depends on source position: the way the response is transformed depends on where the sound source is. Because the brain knows these differences, it can locate a sound by how its response has changed. What is the HRTF for? Primarily, it addresses a limitation of ITD and ILD known as the cone of confusion (Fig 2). This is a cone-shaped set of source positions, centered on the axis running through both ears, on which ITD and ILD are identical. A sound directly in front of us and one directly behind us are the classic example. For sources on such a cone, ITD and ILD alone can’t tell the brain the direction very accurately. By using the HRTF, the brain detects the direction of a sound source much more accurately.


Fig 2: Cone of Confusion
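The cone of confusion can be illustrated with an even simpler ITD model than a spherical head. The minimal sketch below treats the ears as two points in free air, with no head in between, so ITD = (d/c)·sin(azimuth). The 17.5 cm ear spacing is an assumed value. In this model, a source in front and its mirror image behind produce exactly the same time difference, which is why another cue such as the HRTF is needed to tell them apart.

```python
import math

EAR_SPACING_M = 0.175   # assumed distance between the ears
SPEED_OF_SOUND = 343.0  # m/s


def free_field_itd(azimuth_deg: float) -> float:
    """ITD for two points in free air, no head: (d / c) * sin(azimuth).

    Azimuth: 0 = straight ahead, 90 = directly right, 180 = directly behind.
    """
    return EAR_SPACING_M / SPEED_OF_SOUND * math.sin(math.radians(azimuth_deg))


for front in (0, 30, 60):
    back = 180 - front  # mirror image of the front source, behind the head
    print(
        f"{front:>3} deg front: {free_field_itd(front) * 1e6:6.1f} us | "
        f"{back:>3} deg behind: {free_field_itd(back) * 1e6:6.1f} us"
    )
```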

Everything we’ve explained so far deals largely with the outer ear and the sophisticated processing our brain applies to what we hear. What we haven’t addressed is what happens inside the ear. For the purpose of this article, a very brief discussion is all we need. Inside the ear is the eardrum, a thin membrane that acts much like a drum head. It vibrates sympathetically with the sound that enters the ear. Those vibrations are carried by the tiny bones of the middle ear to the cochlea, a small, snail-shaped organ containing very special hair cells connected to nerves. Inside the cochlea, the basilar membrane is a pseudo-resonant structure whose width, thickness, and stiffness vary along its length, so that each part is most sensitive to a different frequency. This is the first aspect of how our hearing discerns pitch. The membrane also acts as a base for more than 15,000 hair cells, which pass these signals on and further sharpen our pitch perception. We perceive tone as a result of the interaction between the resonances of the basilar membrane and the connected hair cells funneling signals to the brain. Of course, there is much more to this organ, and to human hearing generally, than has been discussed here, but that discussion is unimportant for the thesis of this article.
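The idea that each part of the basilar membrane responds best to a different frequency has a widely used quantitative form: Greenwood’s position-to-frequency function. The sketch below uses his published fit for the human cochlea, F = 165.4 (10^(2.1x) - 0.88), where x is the fraction of the membrane’s length measured from the apex. It is an approximation fitted to data, not an exact law, and it is offered only to make the idea of the frequency map concrete.

```python
def greenwood_hz(position: float) -> float:
    """Approximate best frequency at a point on the human basilar membrane.

    position: fraction of the membrane's length measured from the apex
    (0.0 = apex, the low-frequency end; 1.0 = base, nearest the middle ear).
    Constants are Greenwood's published fit for the human cochlea.
    """
    A, a, k = 165.4, 2.1, 0.88
    return A * (10 ** (a * position) - k)


for x in (0.0, 0.25, 0.5, 0.75, 1.0):
    print(f"{x:.2f} of the way from the apex -> {greenwood_hz(x):8.0f} Hz")
```

The map runs from about 20 Hz at the apex to about 20 kHz at the base. Except near the low end, equal steps along the membrane correspond roughly to equal frequency ratios rather than equal numbers of hertz.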

Now that we have a good basic understanding of how our hearing works and especially the sophisticated way in which we perceive direction, we should then think about how this relates to our faithful perception of the musical or movie event.

The Real Event?

Describing the faithful reproduction of the real event as encoded on the media is a far more complex topic than it may seem. Before we recorded music in small studios on multi-track recorders, the recording of a piece of music was, in fact, a real event. The musicians played the entire song together from start to finish. If they made a mistake, it might be forever encoded on the media for all to hear. This reality is part of what I love about live recordings, especially of improvised genres such as Jazz and Blues. This was true of movies as well: the soundstage was where the actors were recorded interacting, just as we saw them, and the effects were reproduced live during the take rather than added in later. Once multi-track recording came into existence, it was no longer necessary to record the real live event. The music or movie event we hear became a produced event: an event that never existed in real life, but rather in the mind of the sound engineer. This raises the question: what is an accurate or faithful reproduction of an event that never existed in real life? I would argue that the sound engineer (and likely the producer, director, or artist) had an aural experience, an event, in mind. Faithfulness would be to what they intended. Unfortunately, given the state of both sound reproduction and sound engineering, I don’t know that the envisioned event is always obvious. What’s encoded on the disc may not be what the artist, director, or engineer actually intended; it may simply be what they heard on their system, with all its errors intact. This is what Toole refers to as the “circle of confusion,” and he discusses this issue far better in his book.

"This is the basis of what I call the “circle of confusion” – elaborated on in my book. The final “performance” is what is heard in a mixing and/or mastering room, through the loudspeaker of unknown pedigree. The book shows examples of the horrible loudspeakers widely used in the recording industry. Film sound is a separate and different nightmare to which a separate chapter is devoted (Chapter 11). Playback conditions are countless."

- Dr. Floyd Toole

I mention this to make the point that a sound reproduction system with an objectively ideal response does not guarantee a faithful reproduction of the original event. Our ears, on the other hand, may tell us what the microphone did not. This goes back to our brain’s sophisticated processing of sound. Over time we learn what something is supposed to sound like; this is partly how we know a given recording doesn’t sound real, even if we have never heard the real event as it was originally intended. We memorize what certain instruments sound like, what kind of tonal balance seems natural, and where instruments or sound sources are typically placed within a soundscape. For example, it might sound odd to hear the singer of a rock band coming from above us; that isn’t a natural place for the singer. The movie “Gravity” plays on this concept to create a certain illusion. George Clooney’s character is often heard from above us, with an indistinct placement that gives the effect of hearing him through headphones. That would make sense if we were hearing him over the headset in a space suit. It would also make sense if we were just imagining Clooney. This raises the question: how do we perceive this event? What mechanisms are at play?


In fact, we have already addressed the question posed at the end of the last paragraph. Our sophisticated means of hearing tones and recognizing direction combine to create our perception of aural reality. The real event mentioned in the last paragraph is a constructed reality. It isn’t real, yet from an aural standpoint our brain is easily fooled into believing it is. Modern sound engineering exploits our knowledge of how we perceive sound and sound direction.

Be it for music or movies, we can now place instruments, voices, or effects in precise locations that fool our brain into thinking they are coming from the real event. Toole noted, in reviewing this article, that:


“the default format for music is two-channel stereo, a directionally and spatially deprived medium incapable of replicating reality. Dumb!”

I agree; we have known about this problem for a long time. The superiority of multi-channel reproduction is well established, yet it has simply never caught on with music the way it should have. It’s really quite remarkable what we can achieve, especially with multi-channel systems, which let us create a believable soundscape that largely matches the reality around us. Think about it for a moment: we can turn a small living room into Middle Earth and be truly fooled into believing we are there. So, I’ve discussed our hearing, our perception of the real event, and how our hearing perceives this as a faithful reproduction of that event. Where does that leave the lowly microphone?

The Lowly Measurement Microphone

As for the lowly measurement microphone, it is a relatively simple device to explain. A microphone works much like a dynamic speaker driver in reverse. It has a diaphragm, which is a lot like a speaker cone. The diaphragm vibrates sympathetically with the sound, and these vibrations are converted into an electrical signal. Some basic microphones, known as the dynamic type, use a voice coil and magnet. A condenser microphone is essentially a capacitor in which one of the plates is the diaphragm. As the diaphragm vibrates, its movement changes the capacitance of the capsule, which in turn causes fluctuations in the output voltage. Once buffered and amplified by a preamplifier, this voltage becomes the signal that represents the sound. Generally speaking, measurement microphones are of the electret condenser type. The more affordable microphones most commonly used by consumers tend to use a Panasonic-made element known as the WM61a, or a clone or derivative of it. This is a polymer-diaphragm, back-electret condenser capsule with a flat frequency response, low noise, and an omnidirectional pattern. It’s also very inexpensive. A variation of this capsule is used in everything from the Dayton Audio UMM-6 to the Earthworks M30. The primary differences between these mics are the quality of the capsule itself and of the preamplifier circuit built into the microphone. For example, Earthworks uses high-grade elements, preconditions and ages them, and tests each one to ensure only the best capsules are used. Their preamplifier circuit is top-notch, and the response of the element is flattened in the mic itself. Cheaper measurement mics use lower-quality clones of this capsule, with less sorting, aging, or conditioning.
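The condenser principle described above can be sketched with the ideal parallel-plate formula, C = ε₀A/d. An electret holds a roughly fixed charge Q, so the voltage V = Q/C = Qd/(ε₀A) follows the gap, and therefore the diaphragm’s movement. The capsule radius, gap, and charge below are hypothetical round numbers chosen for illustration, not specifications of the WM61a or any other capsule.

```python
import math

EPSILON_0 = 8.854e-12  # F/m, permittivity of free space (air is very close to this)


def plate_capacitance(area_m2: float, gap_m: float) -> float:
    """Ideal parallel-plate capacitance, C = epsilon_0 * A / d."""
    return EPSILON_0 * area_m2 / gap_m


# Hypothetical capsule geometry and charge, for illustration only
radius_m = 3e-3     # 3 mm diaphragm radius (assumed)
gap_m = 20e-6       # 20 micrometre gap to the back plate (assumed)
charge_c = 1e-9     # charge held by the electret, in coulombs (assumed)

area_m2 = math.pi * radius_m ** 2
c_rest = plate_capacitance(area_m2, gap_m)
v_rest = charge_c / c_rest  # V = Q / C with the charge held constant

# A sound wave pushes the diaphragm 0.1 micrometre toward the back plate
c_moved = plate_capacitance(area_m2, gap_m - 0.1e-6)
v_moved = charge_c / c_moved

print(f"capacitance at rest: {c_rest * 1e12:.1f} pF")
print(f"relative voltage change: {(v_moved - v_rest) / v_rest:+.3%}")
```

In this idealized model, a 0.5% change in the gap produces a 0.5% change in voltage. The output is therefore proportional to diaphragm displacement, which is what lets the capsule act as a measuring device.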

As for how these measurement microphones compare with professional models from companies like Bruel and Kjaer, the main difference is that the consumer-grade pre-polarized capsules are made with less precision. They also use a polymer diaphragm whose behavior varies with age, temperature, and humidity. Professional lab-grade microphones typically use metal diaphragms, are made by hand, and are carefully characterized to ensure quality. These microphones are orders of magnitude lower in noise and, in some cases, have a much wider dynamic range. The noise floor of a typical consumer-grade microphone is between 25 dB and 30 dB, adequate for basic room measurements but inappropriate for professional measurements. By comparison, the equivalent noise floor of our ear is about 0 dB SPL. That is roughly the quietest sound our ears can hear, which is precisely why 0 dB was chosen as the anchor point.
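To see what those decibel figures mean in physical terms, here is a small sketch of the standard sound pressure level relation, L = 20·log₁₀(p / 20 µPa), where 20 µPa is the 0 dB SPL reference. It converts the quoted 25 to 30 dB microphone noise floors back into pressure. The loop values are simply the figures from the paragraph above.

```python
import math

P_REF_PA = 20e-6  # reference pressure for 0 dB SPL (20 micropascals)


def spl_db(pressure_pa: float) -> float:
    """Sound pressure level in dB SPL for an RMS pressure in pascals."""
    return 20 * math.log10(pressure_pa / P_REF_PA)


def pressure_pa(level_db: float) -> float:
    """Inverse of spl_db: RMS pressure in pascals for a level in dB SPL."""
    return P_REF_PA * 10 ** (level_db / 20)


for floor_db in (0, 25, 30):
    p = pressure_pa(floor_db)
    print(f"{floor_db:>2} dB SPL = {p * 1e6:7.1f} uPa ({p / P_REF_PA:5.1f}x the reference)")
```

A 30 dB noise floor corresponds to roughly 32 times the sound pressure of the 0 dB reference. Quiet details near the threshold of hearing are therefore buried in a consumer microphone’s self-noise.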

The previous paragraphs discussed the basic means by which a microphone works and the limitations of consumer-grade measurement microphones. However, to compare a microphone with human hearing, we need to think about how hearing really works and draw a parallel with our measurements. An integral part of hearing is our brain, which processes the incoming signals so that we can make sense of them. We do the same thing with measurements, though the computers and programs we use are not nearly as sophisticated as the brain. One of the most common approaches for turning measurements into sensible graphics is the Fast Fourier Transform (FFT). It is used to calculate both the amplitude and phase of each frequency for both the test signal and the room measurement. Dividing the measurement by the test signal, frequency by frequency, gives the transfer function (on a decibel scale, this is a simple difference). The transfer function is essentially how the room and speaker have modified the original test signal. In very simple terms, the FFT sorts the detected sound energy into narrow frequency bins. The bins are evenly spaced, each one the sample rate divided by the FFT length wide, so a longer FFT gives narrower bins. On the logarithmic frequency scale used for audio plots, this means there are far more bins per octave at high frequencies than at low ones. Narrow bins allow the signal to be analyzed in very narrow bands, which improves frequency resolution and can reduce the noise in each band. Other methods of analyzing the recorded signal exist, such as Maximum Length Sequence (MLS) analysis. It cross-correlates the MLS test signal with the recording to obtain the system’s impulse response, from which the transfer function follows.
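Both approaches described above can be sketched in a few lines of NumPy. The sketch assumes a 48 kHz sample rate and a made-up “speaker plus room” impulse response (direct sound plus two reflections). It also plays each test signal as a perfect loop, so the math is exact and free of noise. It is an illustration of the principles, not how any particular measurement package is implemented. The first method divides output spectrum by input spectrum, and the second recovers the impulse response by circular cross-correlation with a maximum length sequence.

```python
import numpy as np

FS = 48_000  # assumed sample rate in Hz


def simulated_system(n_taps: int = 64) -> np.ndarray:
    """Hypothetical speaker-plus-room impulse response: direct sound and two reflections."""
    h = np.zeros(n_taps)
    h[0], h[20], h[45] = 1.0, 0.5, 0.25
    return h


def play_looped(x: np.ndarray, h: np.ndarray) -> np.ndarray:
    """Output when a periodic (looped) test signal x passes through h: circular convolution."""
    h_pad = np.zeros(len(x))
    h_pad[: len(h)] = h
    return np.real(np.fft.ifft(np.fft.fft(x) * np.fft.fft(h_pad)))


def fft_transfer_function(x: np.ndarray, y: np.ndarray):
    """Divide the recorded spectrum by the test-signal spectrum, bin by bin."""
    freqs = np.fft.rfftfreq(len(x), d=1 / FS)  # evenly spaced, FS / len(x) apart
    return freqs, np.fft.rfft(y) / np.fft.rfft(x)


def mls_10bit() -> np.ndarray:
    """+/-1 maximum length sequence of length 1023 from a 10-stage shift register.

    Feedback taps at stages 10 and 7, a known maximal-length choice for 10 bits.
    """
    state = [1] * 10
    bits = []
    for _ in range(2 ** 10 - 1):
        bits.append(state[-1])
        feedback = state[9] ^ state[6]
        state = [feedback] + state[:-1]
    return 1.0 - 2.0 * np.array(bits)  # 0 -> +1, 1 -> -1


def mls_impulse_response(s: np.ndarray, y: np.ndarray) -> np.ndarray:
    """Circular cross-correlation of the recording with the MLS, scaled by 1 / (L + 1)."""
    r = np.real(np.fft.ifft(np.fft.fft(y) * np.conj(np.fft.fft(s))))
    return r / (len(s) + 1)


h = simulated_system()

# Method 1: white-noise test signal, H = Y / X from two FFTs
x = np.random.default_rng(0).standard_normal(4096)
freqs, H = fft_transfer_function(x, play_looped(x, h))
print(f"FFT bin width: {freqs[1] - freqs[0]:.5f} Hz")
low = np.count_nonzero((freqs >= 20) & (freqs < 40))
high = np.count_nonzero((freqs >= 10_000) & (freqs < 20_000))
print(f"bins between 20-40 Hz: {low}, bins between 10-20 kHz: {high}")

# Method 2: MLS test signal, impulse response by cross-correlation
s = mls_10bit()
h_est = mls_impulse_response(s, play_looped(s, h))
print(f"largest MLS error: {np.max(np.abs(h_est[: len(h)] - h)):.4f}")
```

Both octaves in the bin count span the same 2:1 frequency ratio, yet the top octave holds hundreds of times as many bins as the bottom one. The MLS estimate carries a small constant offset, equal to the sum of the impulse response divided by L + 1. That offset arises because an MLS’s periodic autocorrelation is -1, rather than 0, away from zero lag; longer sequences shrink it. Real measurements add background noise, distortion, and the microphone’s own response on top of all this.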

For the purposes of our discussion, the exact mathematical function used to analyze the data is less important than understanding what data you get. Namely, these approaches give us the amplitude and phase of the measured system’s transfer function, or equivalently its impulse response in the time domain. The measurement itself is a single-point measurement from an omnidirectional microphone. The amplitude response, or frequency response, of a system tells us how much its tonal response (the level of each tone relative to the mean) varies. A substantial change in tone over a particular bandwidth affects how a system sounds to us. For example, suppose a system had a boost of just 3 dB between 80 Hz and 300 Hz compared with the level at 1 kHz. That might cause male voices to sound “chesty.” They would not sound natural: the lower register of the voice would be too prominent, as if the speaker were putting on a falsely deep voice. We artificially lower our voice by enhancing the resonance in our “chest,” hence the term chesty.
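The “3 dB between 80 Hz and 300 Hz relative to 1 kHz” example can be expressed as a simple calculation on a measured response. The sketch below uses a hypothetical, perfectly flat response with a 3 dB bump in that band, sampled on log-spaced frequency points. It averages the band and compares the result with the level at 1 kHz. The helper name and the synthetic data are illustrative only.

```python
import numpy as np


def band_level_re_1k(freqs_hz, level_db, f_lo, f_hi):
    """Average level in [f_lo, f_hi] relative to the level at 1 kHz, in dB."""
    band = (freqs_hz >= f_lo) & (freqs_hz <= f_hi)
    ref_db = np.interp(1000.0, freqs_hz, level_db)
    return level_db[band].mean() - ref_db


# Hypothetical response: flat, except 3 dB high between 80 Hz and 300 Hz
freqs = np.geomspace(20, 20_000, 500)  # log-spaced, like a typical response plot
level = np.where((freqs >= 80) & (freqs <= 300), 3.0, 0.0)

deviation_db = band_level_re_1k(freqs, level, 80, 300)
print(f"80-300 Hz vs 1 kHz: {deviation_db:+.1f} dB")
print(f"= {10 ** (deviation_db / 20):.2f}x the sound pressure, "
      f"{10 ** (deviation_db / 10):.2f}x the acoustic power")
```

A 3 dB boost is about 1.41 times the sound pressure, or roughly double the acoustic power, across more than an octave and a half of the voice range. Averaging over log-spaced points weights each fraction of an octave equally. Averaging raw, evenly spaced FFT bins would instead give more weight to the upper part of the band.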

Elephant in the Room - which is better?

Having talked about measurement microphones, let’s address the elephant I’ve let into the room. The article set out to discuss the accuracy of our hearing versus that of a microphone, to decide once and for all which is more accurate. I made the point that we can’t really discuss accuracy without establishing a point of reference. My point of reference is the faithful reproduction of the real event (even if that “real” event is an artificial creation). It becomes clear, then, that our hearing is really all that matters. We could walk away now without ever addressing the lowly measurement microphone, but that presents a problem. How could I justify my own frequent use of a microphone for optimizing a sound reproduction system, along with that of most sound engineers, acoustical scientists, and technicians? Surely, a microphone must provide some kind of accuracy that our ears cannot?


Answering this question raises another: why do we measure at all? Audioholics has previously written a great article on exactly this subject, “Why we measure audio.” First and foremost, scientists and engineers use measurements to objectively characterize a speaker. When it comes to engineering a speaker, this is a valuable tool. However, without a point of reference, even that would be of little use. We need to know how those measurements ultimately correlate with what we perceive. This requires careful research correlating perceptions of good sound with the measurements of a system. Keep in mind that it is not just the basic amplitude response that matters here, and certainly not in-room measurements. My own reliance on measurements is largely for optimizing the bass response of a system, setting EQ, and setting the levels and distances of surround system speakers. My point of reference? My ears, and what sounds good to me.

What about the Room’s Acoustics - isn’t that a reason to measure?

“Surely we can’t use our ears to assess a room’s acoustic properties, so measurements must be a great way to objectively characterize and improve the room.” I often hear this argument, and while there is some truth to it, our ears do a fine job of assessing a room’s acoustics. Measurements can be a great way to assess a room’s acoustics, but the main problem is that most existing standards for room acoustics have no relation to our perception of good sound in a small room. What value is there in assessing RT60 if the very concept was derived from much larger acoustic spaces? In fact, most people will find that the decay time of a small room is relatively short, short enough that it is not a great concern. Most small spaces naturally have too much mid- and high-frequency absorption and not enough low-frequency absorption. You don’t need measurements to know that, because it is nearly universally true. Yes, measurements can be helpful in assessing a room’s acoustics. The reality, though, is that most people can’t make much sense of these measurements, and the changes their acoustic treatments make are often unmeasurable with commonly practiced methods.
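For context on where RT60 comes from, Sabine’s classic formula estimates reverberation time as RT60 = 0.161·V / A (metric units). Here V is the room volume and A is the total absorption, the sum of each surface area times its absorption coefficient. Sabine’s derivation assumes a diffuse sound field, with energy spread evenly and arriving from all directions. That assumption holds far better in large halls than in small rooms, which is part of the author’s objection. The room size and absorption coefficients below are hypothetical, single-band values chosen for illustration. Real coefficients vary with frequency, and none of these figures describe the room in Fig 3.

```python
def sabine_rt60(volume_m3, surfaces):
    """Sabine reverberation time, RT60 = 0.161 * V / A (metric units).

    surfaces: iterable of (area in m^2, absorption coefficient 0..1).
    A is the total absorption in metric sabins (m^2).
    """
    total_absorption = sum(area * alpha for area, alpha in surfaces)
    return 0.161 * volume_m3 / total_absorption


# Hypothetical small room: 6.0 m x 4.5 m with a 2.4 m ceiling (illustrative values only)
length, width, height = 6.0, 4.5, 2.4
volume = length * width * height
surfaces = [
    (length * width, 0.02),                 # bare concrete floor (assumed coefficient)
    (length * width, 0.60),                 # absorptive drop ceiling (assumed coefficient)
    (2 * (length + width) * height, 0.05),  # painted drywall walls (assumed coefficient)
]

print(f"volume {volume:.1f} m^3, RT60 ~ {sabine_rt60(volume, surfaces):.2f} s")
```

Even with hard walls and a bare floor, one absorptive ceiling brings this small hypothetical room to around half a second. This illustrates why decay time in small rooms is rarely the dominant problem.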

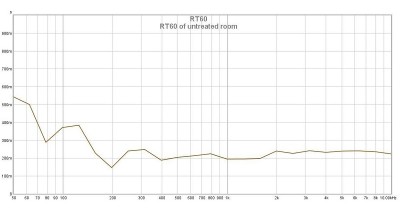

In general, our ears do a better job of assessing a room’s “sound” than a microphone does. The reason is that our ears are a very sophisticated tool for perceiving sound, capable of detecting very small changes in phase, tone, or direction. When we reduce a reflection in a room, our ears can detect the change; our measurements would struggle to show it. Small changes in reflections, especially at high frequencies, do not have a material effect on the steady-state response, which means that adding a small amount of absorption to a wall doesn’t change our measurements much. In small rooms, the decay of sound at mid and high frequencies is so rapid that we would also struggle to see it in time-domain plots such as waterfall graphs. We might see a reduction in RT60 (or related measures of early decay), but who is to say that lower is better? Most small acoustic spaces naturally have an RT60 of around 0.5 seconds. That is a good value with a natural acoustic sound, so why go lower? Many people find very low decay times unnatural. A very dry room might work well for a movie, where the ambiance is artificially added through the surround speakers, but it may make music sound overly dry and artificial. More importantly, measurements aren’t needed to know this; our ears will tell us very quickly. As a point of reference, I’ve included the RT60 measurement of a completely untreated basement room with a false ceiling, bare walls, and a cement floor with no carpet or rugs (Fig 3). The RT60 is very low and very flat because the room is fairly small, with ceilings of less than 8 feet, and the highly absorptive ceiling and the soft furniture in the room together contribute too much absorption.

Fig 3: RT60 of untreated basement listening room with a false ceiling, cement floor, and bare walls

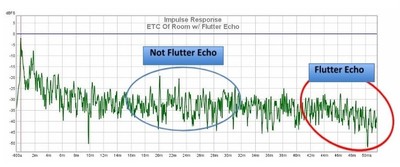
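
For readers wondering where a figure like the RT60 in Fig 3 comes from, one widely used approach is Schroeder backward integration: square the impulse response, integrate it from the end backward to get an energy decay curve, fit a straight line over part of that decay, and extrapolate the slope to a 60 dB drop. The sketch below is a minimal pure-Python illustration, assuming a broadband impulse response and a -5 to -25 dB (T20-style) fit range. Real measurement software typically works in octave or third-octave bands and handles the noise floor carefully, which this sketch does not. The function names are hypothetical, and the test signal is synthetic decaying noise rather than a real room measurement.

```python
import math
import random


def schroeder_edc_db(ir: list[float]) -> list[float]:
    """Energy decay curve in dB via Schroeder backward integration of ir**2."""
    edc = []
    remaining = 0.0
    for x in reversed(ir):
        remaining += x * x
        edc.append(remaining)
    edc.reverse()
    total = edc[0]
    return [10.0 * math.log10(v / total) if v > 0 else -math.inf for v in edc]


def rt60_from_ir(ir: list[float], fs: float,
                 start_db: float = -5.0, end_db: float = -25.0) -> float:
    """Fit a least-squares line to the decay curve between start_db and end_db
    (a T20-style fit) and extrapolate its slope to a 60 dB decay."""
    pts = [(i / fs, level) for i, level in enumerate(schroeder_edc_db(ir))
           if end_db <= level <= start_db]
    n = len(pts)
    mean_t = sum(t for t, _ in pts) / n
    mean_l = sum(lv for _, lv in pts) / n
    slope = (sum((t - mean_t) * (lv - mean_l) for t, lv in pts)
             / sum((t - mean_t) ** 2 for t, _ in pts))  # dB per second
    return -60.0 / slope


def synthetic_ir(rt60: float, fs: float, seconds: float = 1.0,
                 seed: int = 1) -> list[float]:
    """Gaussian noise with an exponential envelope whose energy falls 60 dB
    in rt60 seconds: a crude stand-in for a real room impulse response."""
    rng = random.Random(seed)
    k = 3.0 * math.log(10.0) / rt60  # amplitude decay rate in 1/s
    return [rng.gauss(0.0, 1.0) * math.exp(-k * i / fs)
            for i in range(int(seconds * fs))]


if __name__ == "__main__":
    fs = 16000
    ir = synthetic_ir(0.5, fs)
    print(f"estimated RT60: {rt60_from_ir(ir, fs):.2f} s")
```

The estimate should land close to the 0.5 s used to build the synthetic response. Note that nothing in this number says whether 0.5 s, or any lower value, sounds better in a given room.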

I sometimes hear people talk about flutter echo, specifically the need to measure a room in order to address it. This too is a nonsensical view of the value of measurements, as most people simply can’t measure flutter echo easily. Flutter echo is a rapid series of repeated reflections of relatively short-wavelength sound between two parallel surfaces, and it takes a very specifically placed sound source to excite it. It is best imagined as a ball that bounces back and forth between two walls until it runs out of energy and falls to the ground. That ball could only bounce like that if thrown from a particular location and at a particular angle. The same is true of flutter echo, so the likelihood that you would place a source just so and a mic just so, capture it, and recognize it for what it is, is very low. Even if you could, flutter echo is easily heard with our ears. We could walk around a room clapping our hands until we hear it, and we would likely detect it much more quickly than any measurement rig could. Its source is obvious: our hands clapping (and only a source at the location of our hands would cause that specific echo). The parallel surfaces are very likely the walls, or possibly the floor and ceiling. The fix couldn’t be simpler: don’t put your speakers there.

Dr. Floyd Toole makes the point that acousticians who walk around clapping their hands looking for flutter echo, and then try to sell you a full acoustic package to rid yourself of the problem, are likely charlatans. He’s right: the only flutter echo that matters is one caused by your speakers in their final location and audible at your listening position. It isn’t very common for that placement and distance to cause flutter echo, and even when it does, it’s often remedied cheaply and easily by hanging some pictures on the walls (they “roughen” the wall surface so it isn’t perfectly parallel), adding carpet to the floor, or slightly moving the speakers or your listening position. None of this is complex to execute, and none of it requires measurements. I’ve included a measurement of a listening room that has been treated but shows slight signs of flutter echo (Fig 4). As you can see, flutter echo can be difficult to spot in this Energy Time Curve (ETC); it shows up as spikes at regularly occurring intervals, typically at a time delay that is long relative to the initial impulse. It is also worth noting that nobody I have invited into this listening room has ever noticed the flutter echo, even after I pointed it out (and I will admit that I have never found it noticeable with any actual music).
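
The regular spacing of those spikes follows from simple geometry: sound bouncing between two parallel surfaces returns to a given point once per round trip, so the flutter pattern repeats roughly every 2d/c, where d is the distance between the surfaces and c is the speed of sound (a listener midway between the surfaces can see spikes at half that interval, because the reflections from each wall interleave). The sketch below assumes c ≈ 343 m/s and uses hypothetical peak times, as if read off an ETC, to check whether a set of peaks is evenly spaced. The helper names are illustrative, and this is not a detector for real measurement data.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # assumption: air at roughly 20 °C


def flutter_period_ms(spacing_m: float) -> float:
    """Round-trip time between two parallel surfaces spacing_m apart, in ms.
    The flutter pattern repeats at this period (a listener midway between
    the surfaces can see spikes at half this interval)."""
    return 2.0 * spacing_m / SPEED_OF_SOUND_M_PER_S * 1000.0


def evenly_spaced(peak_times_ms: list[float], expected_ms: float,
                  tolerance_ms: float = 1.0) -> bool:
    """True if at least three peaks are spaced about expected_ms apart."""
    gaps = [b - a for a, b in zip(peak_times_ms, peak_times_ms[1:])]
    return len(gaps) >= 2 and all(abs(g - expected_ms) <= tolerance_ms
                                  for g in gaps)


if __name__ == "__main__":
    period = flutter_period_ms(4.0)  # hypothetical walls 4 m (about 13 ft) apart
    print(f"expected spacing: {period:.1f} ms")
    # Hypothetical peak times read off an ETC, in ms after the direct sound.
    print(evenly_spaced([30.0, 53.4, 76.6, 100.0], period))
```

For walls about 4 m apart, the spikes would recur roughly every 23 ms, which is why flutter echo tends to appear well after the initial impulse in an ETC.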

Fig 4: Example of Flutter Echo in Measurement

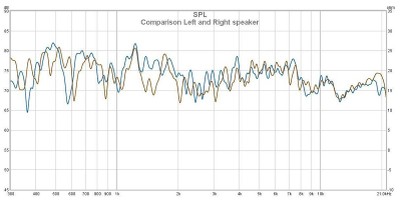

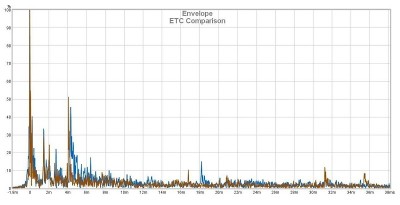

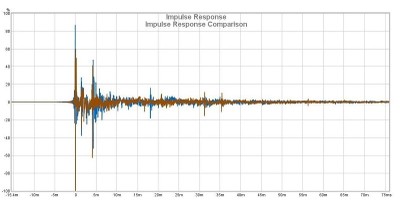

I’ll relate a final story from my own experience working with a client on his listening room, in which my ears proved a more valuable tool than my measurement equipment. While taking measurements of his space, I noticed a piano sitting directly adjacent to his right speaker. I thought to myself, “I wonder if that piano resonates with the music, and if so, whether it is audible.” While listening to music, I thought I heard a number of strange things, but I had a hard time pinning them down. When I took left- and right-channel measurements at different locations, the test tones sounded odd, especially near the right speaker. Yet the measurements looked completely normal (Fig 5, 6, 7). Partway through the measurement process, I paused to think about how a piano resonance would present itself and what exactly I was hearing, and then I listened for it. I positioned myself closer to the piano as the sweep ran, and I could clearly hear what sounded like a piano string (or strings) resonating. The sound was closer to my right ear than my left, more prevalent on the right side of my head, and present at specific frequencies. This gave me clues to look for evidence of the effect in the measurements, but it was nowhere to be found. Ultimately, I suggested the client move his piano farther away from the speakers and listening position so that its resonance would not mix with and corrupt the musical signal. Placing the piano at the back of the room would delay the resonance enough that our brain would likely perceive it as hall ambiance rather than a corruption of the direct signal. Later, I studied the more than 100 measurements I took at the client's house, each one meticulously labeled by measurement location, mic position, and speaker playing. In the end, I was never sure I found evidence of the piano string resonance that my ears so clearly heard. I’ve included several comparison graphs so you can examine them for yourself.

The measurements in the graphs were taken 1 meter from each speaker, so the mic was closer to the offending piano when measuring the right speaker than when measuring the left. As a point of reference, the piano was roughly 2-3 feet from the right speaker, placing it (as a sound source) roughly 2-3 ms away. It was around 10 feet from the microphone when measuring the left speaker, making it around 9 ms away. Since the piano is fairly large, I would argue that anything you find in the 6-12 ms range is potentially from the piano, but remember, the same signature needs to show up at 1-4 ms in the right speaker measurement. Look for yourself and comment below if you believe you have spotted the offending resonances with certainty.
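
The distance-to-delay conversion behind those numbers is easy to check. The sketch below assumes a speed of sound of about 1,125 ft/s (343 m/s) and treats each distance as a straight-line path, ignoring the piano's size and the extra length of the speaker-to-piano-to-mic route, so its outputs are rough estimates rather than exact arrival times. The function name is illustrative.

```python
SPEED_OF_SOUND_FT_PER_S = 1125.0  # assumption: about 343 m/s at room temperature


def delay_ms(distance_ft: float) -> float:
    """Straight-line acoustic travel time over distance_ft, in milliseconds."""
    return distance_ft / SPEED_OF_SOUND_FT_PER_S * 1000.0


if __name__ == "__main__":
    for label, feet in [("piano to right speaker (2 ft)", 2.0),
                        ("piano to right speaker (3 ft)", 3.0),
                        ("piano to mic, left-speaker sweep (10 ft)", 10.0)]:
        print(f"{label}: {delay_ms(feet):.1f} ms")
```

This prints 1.8, 2.7, and 8.9 ms. A handy rule of thumb that falls out of it is that sound covers a little over 1 foot per millisecond.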

Fig 5: In-Room Measurement Comparison

Fig 6: ETC Comparison

Fig 7: Impulse Response Comparison

Conclusion

This article started by asking a question that doesn’t really set up a sensible comparison: which is more accurate, our ears or a microphone? Accurate by what standard? What are we looking for? In the end, it should be clear that a measurement microphone is an accurate device for measuring a room and speakers, and that it serves a specific and useful purpose. It should also be clear that our ears are very capable and accurate themselves, but the two serve different purposes and work in different ways. Where consumer measurement equipment is typically omnidirectional and monophonic, our ears are directional and binaural. This point alone is critically important, because it tells us that what we hear isn’t going to correlate directly with what we measure.
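
To make the binaural point concrete: one cue a single omnidirectional microphone cannot capture is the interaural time difference (ITD), the small difference in arrival time between the two ears that helps us localize sound. A common textbook approximation, Woodworth's rigid spherical-head model, is sketched below, assuming a head radius of 8.75 cm, a distant source, and c ≈ 343 m/s. It is a simplification that ignores the real shape of the head and ears and the frequency dependence of the cue, but it shows the scale of timing differences our hearing works with, information that is simply absent from a single-channel measurement.

```python
import math

HEAD_RADIUS_M = 0.0875          # assumption: typical adult head radius
SPEED_OF_SOUND_M_PER_S = 343.0  # assumption: air at roughly 20 °C


def woodworth_itd_us(azimuth_deg: float) -> float:
    """Interaural time difference in microseconds for a distant source,
    using Woodworth's rigid-sphere approximation
        ITD = (a / c) * (theta + sin(theta)),  valid for 0 <= theta <= 90 deg.
    """
    if not 0.0 <= azimuth_deg <= 90.0:
        raise ValueError("azimuth must be between 0 and 90 degrees")
    theta = math.radians(azimuth_deg)
    return HEAD_RADIUS_M / SPEED_OF_SOUND_M_PER_S * (theta + math.sin(theta)) * 1e6


if __name__ == "__main__":
    for az in (0, 15, 30, 60, 90):
        print(f"{az:>2} deg off center: {woodworth_itd_us(az):4.0f} us")
```

Even for a source directly to one side, the model gives only about 0.66 ms, and a centered source gives zero; a single mic records one pressure signal and has no second "ear" against which to compare arrival times.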

Since the primary purpose of our music and movie systems is our own entertainment through accurate reproduction of the “real” event, it is ultimately our perception that becomes the point of reference. Accuracy is thus defined by our perception of the reproduced event, and a microphone can’t tell us that. Sure, the microphone has its uses: measuring a room’s response can help integrate and optimize the low-frequency response, at least to a point. While the quality of the low-frequency response is certainly important to our perception of accuracy, it is not all that matters. These measurements tell us nothing about how the speakers present the soundstage, and they give us no clues about the spaciousness of the soundscape. Capturing that information would require far more sophisticated measurements and far more knowledge than the average consumer has access to. In fact, when Dr. Floyd Toole reviewed this article, he summed up typical consumer room measurements, made with a single omnidirectional microphone and an FFT analyzer, as “dumb” relative to human hearing. Such a system lacks the sophisticated signal processing needed to detect sound and extract the kind of information our ears quickly make sense of. The purpose of this article is not to damn measurements, because they have their place, and they can be fun and helpful. However, there is no denying that an over-reliance on the perceived objectivity of measurements has developed, along with a diminished reliance on what our own ears tell us about the accuracy of our systems. This shift is to the detriment of good sound. Many consumers would be far better served spending time training their ears to recognize good sound: learning what different room reverberation times sound like, learning what specific changes in tone sound like, or experiencing the “real” event firsthand.

How can we know what a trumpet is supposed to sound like if we have never heard one live, unaided by electronic amplification? The key takeaway is that a flat in-room response is no guarantee of good sound. It is not necessarily even a desirable trait, and pursuing it based solely on in-room measurements, without regard for the many other important factors in good sound reproduction, is more likely to lead to a bad-sounding system.

References

1. Toole, F. E. Sound Reproduction: The Acoustics and Psychoacoustics of Loudspeakers and Rooms, Third Edition. Focal Press, 2017.

2. Shaw, E. A. G., and Vaillancourt, M. M. Transformation of sound-pressure level from the free field to the eardrum presented in numerical form. Journal of the Acoustical Society of America, 78:1120-1123, 1985.

3. Domnitz, R. H., and Colburn, H. S. Lateral position and interaural discrimination. Journal of the Acoustical Society of America, 61:1586-1598, 1977.