HDMI 1.3 and Cables Part 1: It's All in the Bitrate

Special thanks to Steven Barlow of DVIGear for technical contributions to this article

With the advent of HDMI v1.3 and 1.3a, consumers are becoming genuinely confused about cables and what they need to worry about when selecting a product that will be compatible with the new specifications. We interviewed Steven Barlow of DVIGear to understand why this is a complex issue for some and a non-issue for others. He allowed us to incorporate much of our discussion into this article.

Before we get too far, it's important to understand a very significant term that relates to digital signals: "bitrate." Bitrate is the speed at which bits move through the cable, AV receiver, DVD player, or any other part of the system. Home theater enthusiasts tend to think in terms of resolution, and even the more advanced consumer electronics pro typically understands and expresses bitrate through the concepts of resolution and color depth. So you'll often hear bitrate-centric issues framed as 1080p being "better" than 1080i, or 1080p with 8-bit color being "less" than 1080p with 12-bit (Deep) color.

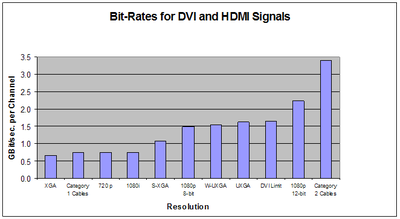

But from a digital engineering standpoint, everything is bitrate, and that is where we begin our discussion. For high-definition video, 720p and 1080i are the first "bus stop," at 742.5 Mbit/s: 742.5 million bits of information traveling through each of the digital pipeline's data channels every second. Many AV receiver chipsets handle these resolutions with no problems at all, and ALL HDMI cables that aren't made in someone's garage will pass this bitrate over a considerable length.
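Where does 742.5 Mbit/s come from? Here is a minimal sketch, assuming the standard CEA-861 total frame sizes (visible pixels plus blanking) of 1650x750 for 720p60 and 2200x1125 for 1080i60, and HDMI's TMDS encoding, which sends 10 bits on each channel for every 8-bit pixel value. The function name `tmds_bitrate_mbps` is our own, chosen for illustration:

```python
def tmds_bitrate_mbps(h_total: int, v_total: int, frame_rate: float) -> float:
    """Per-channel TMDS bitrate in Mbit/s at 8-bit color.

    h_total and v_total are the *total* pixels per line and lines per
    frame, including blanking. TMDS sends 10 bits per pixel per channel
    (8b/10b encoding), so the bitrate is 10x the pixel clock.
    """
    pixel_clock_mhz = h_total * v_total * frame_rate / 1e6
    return pixel_clock_mhz * 10

# 720p60: 1650 x 750 total, 60 full frames per second
print(tmds_bitrate_mbps(1650, 750, 60))   # 742.5
# 1080i60: 2200 x 1125 total, 60 fields = 30 full frames per second
print(tmds_bitrate_mbps(2200, 1125, 30))  # 742.5
```

Both formats land on the same 74.25 MHz pixel clock, which is why they share the first bus stop.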

The next stop on the route to HD bliss was 1080p, which, although thought of as the perfect format, was initially limited to the normal 8-bit color depth, yielding a bitrate of 1.485 Gbit/s (1485 Mbit/s). If you do the math, 1080p has twice the bitrate of 720p or 1080i. That should be a big deal to anyone planning a long HDMI cable run in their home theater room. Taking this a step further, if you plan to use any PC resolutions that exceed 1080p, your cable requirements become even more demanding: 1920x1200/60Hz, for example, has a bitrate of 1.54 Gbit/s, and 1600x1200/60Hz requires 1.62 Gbit/s. If you're going to run HDMI or DVI over long distances, it's a good idea to confirm that the manufacturer has had the cable certified for your particular bitrate requirements. DVIGear, for example, has spec'd all of its SHR (Super High-Resolution) HDMI and DVI-D cables to handle up to 2.25 Gbit/s (the highest bitrate supported by any product on the market today) at lengths of up to 7.5 meters without any EQs or active components. Its active cables can go up to 40 meters at this bitrate.
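The same arithmetic reproduces the figures above. This sketch assumes the CEA-861 total timing for 1080p60 (2200x1125), the VESA CVT reduced-blanking timing for 1920x1200/60Hz (2080x1235, at a nominal 60 Hz), and the VESA DMT timing for 1600x1200/60Hz (2160x1250). The helper `fits_cable` and its 2,250 Mbit/s default (the SHR rating quoted above) are hypothetical and for illustration only; a real cable's rating also depends on its length.

```python
# Total (active + blanking) timings: (h_total, v_total, frames per second)
TIMINGS = {
    "720p60": (1650, 750, 60),                  # CEA-861
    "1080p60": (2200, 1125, 60),                # CEA-861
    "1920x1200/60 (CVT-RB)": (2080, 1235, 60),  # VESA reduced blanking, nominal 60 Hz
    "1600x1200/60 (DMT)": (2160, 1250, 60),     # VESA DMT
}

def tmds_bitrate_mbps(h_total: int, v_total: int, frame_rate: float) -> float:
    # 10 TMDS bits per pixel per channel at 8-bit color
    return h_total * v_total * frame_rate * 10 / 1e6

def fits_cable(bitrate_mbps: float, cable_rating_mbps: float = 2250.0) -> bool:
    """Hypothetical check: does a format fit within a cable's rated bitrate?"""
    return bitrate_mbps <= cable_rating_mbps

for name, timing in TIMINGS.items():
    rate = tmds_bitrate_mbps(*timing)
    print(f"{name}: {rate / 1000:.3f} Gbit/s, fits 2.25 Gbit/s cable: {fits_cable(rate)}")

# 1080p60 carries exactly twice the data of 720p60
print(tmds_bitrate_mbps(*TIMINGS["1080p60"]) / tmds_bitrate_mbps(*TIMINGS["720p60"]))
```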

Note: These bitrates may not make sense at first glance, since the higher resolution (1920x1200) has a slightly lower bitrate than 1600x1200. This has to do with blanking time and active time. Active time is when the image is being written on-screen. Blanking time is when the scan skips over the areas you don't see (think of an old typewriter during a carriage return). The concept derives from CRT scanning, where the beam had to blank while traveling back to the left before drawing the next line. Blanking time plus active time equals total time, so the longer the blanking time, the shorter the active time, and vice versa. 1600x1200 has a longer horizontal blanking time and therefore a shorter active time, so its image data must be crammed in at a higher rate. 1920x1200 has a longer active time and a shorter blanking time, so it can be carried at a lower bitrate. VESA defines all of these standards and timings.
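The blanking argument can be checked numerically. This sketch assumes the same VESA timings used above (2160x1250 total for 1600x1200/60Hz, and the reduced-blanking 2080x1235 total for 1920x1200/60Hz); `active_fraction` is a hypothetical helper name:

```python
# (h_active, v_active, h_total, v_total) for the two PC modes discussed above
MODES = {
    "1600x1200/60 (DMT)": (1600, 1200, 2160, 1250),
    "1920x1200/60 (CVT-RB)": (1920, 1200, 2080, 1235),
}

def active_fraction(h_active: int, v_active: int, h_total: int, v_total: int) -> float:
    """Share of each frame's pixel periods that carry visible image data."""
    return (h_active * v_active) / (h_total * v_total)

for name, (ha, va, ht, vt) in MODES.items():
    blank_px = ht - ha  # horizontal blanking, in pixel periods per line
    print(f"{name}: {blank_px} blanking pixels per line, "
          f"{active_fraction(ha, va, ht, vt):.1%} of the frame is active, "
          f"{ht * vt:,} total pixel periods per frame")
```

The 1920x1200 mode spends only 160 pixel periods per line on blanking versus 560 for 1600x1200, so its total frame is smaller even though its visible image is larger, and its pixel clock (and therefore its bitrate) comes out lower.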

HDMI v1.3 - What Were They Thinking?

Before we get into HDMI v1.3, it is important to note the state of the consumer electronics industry prior to its introduction. At just 1.485 Gbit/s for 1080p at 8-bit color, many cable vendors' products fell to pieces after just 5 meters (and believe us, some didn't make it that far). Most major-name cable manufacturers were labeling their products accurately, but a lot of imported products claimed 1080p compatibility at such ridiculous lengths that it was obvious the companies were uninformed. Just as the industry seemed to be getting a grip on this concept and custom installers were properly outfitting their installs with cables that would "stand the test of time," the powers that be at HDMI Licensing, LLC, a wholly-owned subsidiary of Silicon Image (a principal founder of the HDMI standard), grew concerned about emerging technologies such as VESA's DisplayPort, a format that threatened to compete with HDMI. On June 22, 2006, they announced to the industry the completion of a new spec that greatly extends HDMI's capabilities, but much of that spec still exists only on paper.

Per the spec, HDMI v1.3 can handle up to 3.4 Gbit/s per channel. Don't be confused by the inflated numbers used by the marketing people at HDMI.org: they express the bandwidth as 10.2 Gbit/s, which is simply the per-channel bitrate multiplied by the three color channels (RGB). While Silicon Image and HDMI Licensing, LLC said everyone was going to support 3.4 Gbit/s, they didn't exactly provide a lot of "support" for the first six months. For a long time there wasn't enough silicon to produce the transmitter and receiver chips needed to implement the new technology, so most manufacturers waited until 2007 to produce consumer electronics products with the v1.3 features. As silicon emerged from Silicon Image and a few other manufacturers in 2007, it supported up to 2.25 Gbit/s, the bitrate associated with 1080p at 12-bit color per channel. That falls short of HDMI 1.3's theoretical maximum, but it is certainly more than 8-bit color requires and less than the 16-bit color HDMI v1.3 claims to be able to handle.
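The marketing figure is easy to reproduce. This sketch assumes HDMI 1.3's maximum TMDS clock of 340 MHz and 10 transmitted bits per clock on each of the three TMDS data channels; the constant names are ours:

```python
TMDS_DATA_CHANNELS = 3     # three TMDS data channels carry the video
MAX_TMDS_CLOCK_MHZ = 340   # HDMI 1.3 maximum TMDS clock
BITS_PER_CLOCK = 10        # each 8-bit value is sent as a 10-bit TMDS character

per_channel_mbps = MAX_TMDS_CLOCK_MHZ * BITS_PER_CLOCK   # 3400 Mbit/s
aggregate_mbps = per_channel_mbps * TMDS_DATA_CHANNELS   # 10200 Mbit/s

print(f"Per channel: {per_channel_mbps / 1000:.1f} Gbit/s")    # Per channel: 3.4 Gbit/s
print(f"Marketing total: {aggregate_mbps / 1000:.1f} Gbit/s")  # Marketing total: 10.2 Gbit/s

# The same multiplication turns real-world 2.25 Gbit/s silicon into 6.75 Gbit/s "on paper"
print(f"2007 silicon, marketing-style: {2250 * TMDS_DATA_CHANNELS / 1000:.2f} Gbit/s")
```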

8-bit, 10-bit, 12-bit… What's the Deal?

Bit depth determines the number of colors a display can show. Increasing the bit depth of a display (and matching the source components and cabling to support it) greatly reduces the chance of color banding, where smooth color gradients cannot be accurately reproduced and break up into visible bands. To understand how this affects bitrate, consider the following:

- 8-bit color = (2^8)^3 = 2^24 ≈ 16.7 million colors

- 12-bit color = (2^12)^3 = 2^36 ≈ 68.7 billion colors
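The color counts come from raising the number of levels per channel to the third power, one factor per color channel. A short sketch (the function name is ours), extended to 10-bit and 16-bit for comparison:

```python
def color_count(bits_per_channel: int, channels: int = 3) -> int:
    """Distinct colors: 2**bits levels per channel, combined across channels."""
    return (2 ** bits_per_channel) ** channels

for bits in (8, 10, 12, 16):
    print(f"{bits}-bit: {color_count(bits):,} colors")

# 8-bit: 16,777,216 colors
# 10-bit: 1,073,741,824 colors
# 12-bit: 68,719,476,736 colors
# 16-bit: 281,474,976,710,656 colors
```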

Because the number of colors grows exponentially with bit depth (each extra bit per channel multiplies it by eight), increasing the bit depth dramatically improves color rendition. Now, remember that the old benchmark limit was 1.65 Gbit/s, much more than any home theater enthusiast needed for excellent resolution and performance. After all, at the time, these cutting-edge enthusiasts were only concerned with attaining 1080p at 8-bit color, something that was very new and available only to a handful of elite consumers. A year ago, most of these consumers didn't even know what 1080p was, and they almost certainly weren't concerned with achieving 12-bit color (let alone 16-bit). Still, 12-bit is nice and will likely result in reduced banding, especially during darker scenes in light-controlled environments.

If you take 1080p resolution at 12-bit color, the math comes out to 2.2275 Gbit/s. Fortunately for HDMI Licensing, LLC, some chipsets are now finally supporting that bitrate in mass quantities. What has transpired is a gradual transition in which consumer electronics manufacturers can choose to pay a premium (typically up to $1.50 more per chip) for these chips in order to enable Deep Color support and create a value-added product for consumers and dealers.
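Deep Color raises the TMDS clock in proportion to bit depth (1.25x for 10-bit, 1.5x for 12-bit, 2x for 16-bit), so the 1080p figures can be derived from the 8-bit 1.485 Gbit/s baseline. This sketch assumes the 1.65 Gbit/s pre-1.3 ceiling and the 3.4 Gbit/s HDMI 1.3 ceiling quoted in this article; `deep_color_mbps` is a hypothetical helper:

```python
BASE_1080P60_MBPS = 1485.0  # 8-bit 1080p60, per TMDS channel
LEGACY_LIMIT_MBPS = 1650.0  # pre-1.3 ceiling (165 MHz TMDS clock)
HDMI13_LIMIT_MBPS = 3400.0  # HDMI 1.3 per-channel maximum (340 MHz)

def deep_color_mbps(base_mbps: float, bits_per_channel: int) -> float:
    """Scale the 8-bit bitrate by bits/8 (e.g. 1.5x for 12-bit)."""
    return base_mbps * bits_per_channel / 8

for bits in (8, 10, 12, 16):
    rate = deep_color_mbps(BASE_1080P60_MBPS, bits)
    print(f"1080p60 {bits}-bit: {rate:.2f} Mbit/s | "
          f"pre-1.3 OK: {rate <= LEGACY_LIMIT_MBPS} | "
          f"HDMI 1.3 OK: {rate <= HDMI13_LIMIT_MBPS}")
```

Only 8-bit 1080p fits under the old ceiling. 12-bit lands at 2227.5 Mbit/s, just under the 2.25 Gbit/s that current silicon supports, and even 16-bit (2970 Mbit/s) fits within the paper maximum.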

So the Cable Drama Continues, With a New Twist

Just as cable manufacturers were starting to come to grips with the fact that most HDMI cables didn't have the metrics to pass 8-bit 1080p over 10 meters without compensating electronics, they are now faced with a new problem: 1080p at 12-bit. The numbers have been crunched, but testing is still underway as cable manufacturers send in their products to be certified against the new requirements. Of course, many cable manufacturers don't seek certification at all, in which case buyer beware. There are also serious issues with the certification process, which we'll address later.

Thanks to v1.3 and Deep Color, the distances passive copper cables can cover have shortened yet again, and in a sense the industry has been set back a year until the effects of this slow transition are fully understood. We used to say, "Don't put a crappy cable in your wall - it might not pass 1080p." Now we must say, "Watch out for 12-bit Deep Color (not to mention any future higher-resolution formats or bitrates) - that same cable might need some electronics to pass it properly." For any cable longer than 3-5 meters, it is quickly becoming apparent that active components play a major role in signal integrity.

Dealers and custom installers are going to have to bone up on the cable electronics needed to migrate their clients to the latest technology, and learn which cables can handle this new bandwidth and over what lengths. Active HDMI cable solutions from respected companies like DVIGear are now certified to work up to 40 meters at 1080p 12-bit color. These active solutions remain the most popular method for long-run installations because fiber solutions are so much more expensive.

To put it bluntly, you don't want to get caught putting a cable in your wall (or worse, your client's wall) that won't be compatible with current and expected future technologies. Undoing a mistake like that could be very expensive, costing you a lot of time and energy. Many customers are being systematically shortchanged by installers who are only interested in meeting current 720p and 1080i requirements. Don't let yourself or your clients be obsoleted too quickly!

HDMI 1.3 Certification - A Real Dilemma

So what's so special about certification? Nothing, if you're running a 3-foot HDMI cable from the DVD player to an LCD panel; just about any old cable will do. However, if you are putting a cable into a wall or ceiling and it may be pushed toward the format's maximum potential, you might want to spend a little extra and buy from a company that tests and guarantees its products.

The interesting thing about certification, however, is that HDMI Licensing, LLC doesn't even account for the real-world situation in which chip manufacturers don't fully support 3.4 Gbit/s. No one we know of supports the theoretical maximum. As a result, a cable meant for the real-world bitrate of 2.2275 Gbit/s (1080p at 12-bit color) gets tested against the same criteria as a 1080i cable that only needs to pass 742.5 Mbit/s. That's right: there is currently no certification for 1080p at 12-bit. Are you scared? You should be.

So far, from what we understand, HDMI certification is largely a fast and loose (not to mention expensive and apparently profitable) program that does nothing to truly ensure that a manufacturer's production cables meet or exceed any practical current specification. Why do we say this? Simple. The HDMI testing standard currently has two categories:

- Category 1 is for 720p and 1080i cables at 8-bit color. This is the "chump change" spec that was business as usual before HDMI 1.3 and, unless the connectors fall off, any Chinese-made cable is going to hit this for at least 4-5 meters without a hiccup.

- Category 2 is the balls-to-the-wall 3.4 Gbit/s (non-existent) standard. The only silicon on the market beyond Category 1 is rated to handle up to 2.25 Gbit/s. For certification you either test to Category 1 (duh) or Category 2 (meaning you must over-design your product).

What the HDMI people should have done is add a real Category 2 certification for real-world 1080p/60 12-bit color (~2.25 Gbit/s), since that bitrate is supported by current silicon. A "Category 3" could then cover the theoretical maximum of 3.4 Gbit/s for future-ready products such as cables (since no electronics can pass the theoretical maximum at present).
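To see the gap concretely, here is a sketch that maps a per-channel bitrate to the lowest certification tier that covers it, under both the current two-category scheme and the three-category scheme proposed above. The tier ceilings are the figures given in this article; the function and list names are hypothetical:

```python
from typing import List, Optional, Tuple

# Per-channel bitrate ceilings in Mbit/s for each certification tier
CURRENT_CATEGORIES: List[Tuple[str, float]] = [
    ("Category 1", 742.5),
    ("Category 2", 3400.0),
]
# The proposed scheme, with a real-world tier for 1080p/60 12-bit
PROPOSED_CATEGORIES: List[Tuple[str, float]] = [
    ("Category 1", 742.5),
    ("Category 2", 2250.0),
    ("Category 3", 3400.0),
]

def required_category(bitrate_mbps: float,
                      categories: List[Tuple[str, float]]) -> Optional[str]:
    """Return the lowest tier whose ceiling covers the bitrate, or None."""
    for name, ceiling in categories:
        if bitrate_mbps <= ceiling:
            return name
    return None

for label, rate in [("1080i 8-bit", 742.5), ("1080p 8-bit", 1485.0), ("1080p 12-bit", 2227.5)]:
    print(f"{label}: current={required_category(rate, CURRENT_CATEGORIES)}, "
          f"proposed={required_category(rate, PROPOSED_CATEGORIES)}")
```

Under the current scheme, everything above 742.5 Mbit/s, from 8-bit 1080p to 12-bit Deep Color, falls straight into the 3.4 Gbit/s Category 2. With the proposed tiers, both would instead be certified against a 2.25 Gbit/s ceiling that shipping silicon can actually drive.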

Conclusion

So where does this all lead? To a grand new adventure. Progress is good, but HDMI v1.3 was a bit of an artificial "nudge" in a direction consumers didn't necessarily feel the need to take. Even so, I believe it will all work out in the end, and it did prompt the serious cable manufacturers to quickly recognize the need for active solutions to ensure signal stability and integrity over longer cable runs. Without the increased bitrates, this might have taken longer, and more consumers would be installing cables that couldn't pass the high-bitrate signals now present in an ever-increasing amount of consumer electronics. The key thing to remember is that you cannot install an HDMI cable designed to pass Category 1 specs (742.5 Mbit/s) in your ceiling or wall and assume it will be just fine for features such as 1080p and 12-bit Deep Color. Pay attention and ask questions so that you aren't pulling your hair out down the line as you rip open your walls and curse your custom installer for letting you take the cheap way out!
