AMD Fuels Nightmares of Intel & Nvidia

2020 may not go down in history as anyone’s favorite year, unless your name happens to be Advanced Micro Devices (AMD). From thread-ripping CPUs to the Big Navi GPU hype-train, everything’s coming up AMD this year. The company’s resurgence is giving competitors in two distinct microprocessor markets reason to worry.

The company’s stock price continues to shatter records after AMD beat Intel to a 7nm process. According to industry analyst Mercury Research, AMD’s share of the x86 market overall recently reached 18.3%, its highest since 2013. AMD’s share of the desktop CPU market began a growth spurt in 2018 that has continued for ten consecutive quarters. And on the GPU side of the business, AMD is set to bring serious competition to Nvidia’s top-shelf graphics cards for the first time in years.

Anders Bylund at Motley Fool says of AMD stock:

"The company is executing like a champion while arch-rival Intel experiences manufacturing issues, and AMD's products are in high demand during the COVID-19 pandemic. AMD's stock has surged 174% higher over the last 52 weeks, including a 77% gain in 2020 alone."

Nvidia Grows While AMD Explodes

But things haven’t always been this good for the chip-maker from Santa Clara, California. The year 2020 could mark one of the most dramatic turnarounds in tech-industry history. AMD’s 2020 story could go on to rival the historic turnaround of Apple, when Steve Jobs made his heroic return in 1997 to pick up the pieces of his company and went on to create today’s smartphone industry. A turnaround of this magnitude is no small feat for a company that’s been a perennial underdog in two very different chip markets against entrenched industry giants. A combination of focused leadership and brilliant engineering has allowed Team Red (as the AMD-faithful are called) to claw its way back from obscurity while providing pure nightmare-fuel for competitors. Even card-carrying members of Team Green (Nvidia) or Team Blue (Intel) have to admit that AMD’s growth has been great for consumers overall because, as any seasoned PC-builder will tell you, unfettered market dominance leads to grievous price-tag injustices. By getting back into competitive shape, AMD can once again occupy its niche of offering consumers a solid price/performance alternative.

Personal Note: No, I am not an AMD fanboy. Of all the PCs I've assembled for my own use over the decades, I've only purchased an AMD product once, long ago. It was the excellent 486 DX2 66 processor in the early 90s. My current HTPC/gaming rig happens to contain Intel and Nvidia. But like any bargain-hunter seeking to avoid the top-of-the-line hardware tax while harboring no silly corporate loyalties, I'd have to seriously consider AMD parts for my next PC.

AMD: Dark Days

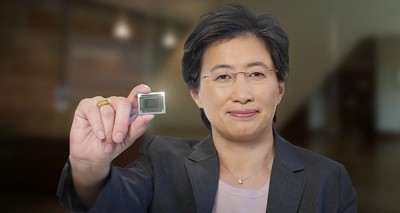

Just five years ago AMD was in serious trouble. The company was drowning in debt and facing bankruptcy as its stock price sank to around $2 per share in late 2014. This was the Gong Show inherited by a new, unproven CEO, Dr. Lisa Su. Although it may not have looked like it at the time, filling the vacant CEO position from within was a confident move for AMD. If it had instead brought in a chief executive from outside, AMD might have sent a message that it was a sinking ship in full damage control mode. Dr. Su now had the unenviable task of stopping the company from taking on more water.

Dr. Su had been an exec at AMD since 2012, and her electrical engineering PhD from M.I.T. meant she brought insights into the company’s capabilities. When Dr. Su accepted the position as AMD’s first female CEO in 2014, she must have known that AMD had an arsenal of secret weapons that, with a little luck, would see the company through a full turnaround.

AMD in x86 Lore

From its early days, AMD was just another small chip-maker lurking in the shadows of Intel. An IBM policy required that the 8086 and 8088 chips in its computers come from at least two sources in an effort to monopoly-proof supply. AMD had been that second-source manufacturer for Intel in the 1980s, then had the audacity to create its own reverse-engineered versions of Intel’s 386 chips in 1991. We can thank a 1994 California Supreme Court decision in AMD’s favor that helped open the door for smaller x86 competitors like AMD and others, including… Does anyone remember Cyrix? The court’s decision wounded Intel’s veritable monopoly on PC processors and ever since, the company has learned to use lawyers and courts as much as engineering to maintain its dominant position in the x86 processor market. But when it comes to the smaller chip-makers nipping at the heels of Intel, none have been as persistent a pest as AMD.

When AMD purchased Canadian graphics card company ATI back in 2006, it took control of more than just the company’s talent and graphics technology. AMD also borrowed elements from ATI’s branding. It used the name Radeon for its graphics technology division and seized ATI’s branded red mark, abandoning its old green and black logo. Thus Team Red was born. The ATI acquisition wasn’t cheap. It cost AMD $5.4 billion and enabled it to continue the tradition of playing underdog in another competitive chip market, graphics processors (GPUs). Just as in the CPU market, AMD’s Radeon graphics cards would intermittently stand as a solid price-to-performance choice for your next gaming rig or HTPC. But its chips would seldom be anyone’s “money-is-no-object” choice in either graphics or CPU. “Team Red” diehards notwithstanding, of course.

A CEO with a Plan

So, what was Dr. Lisa Su’s plan to turn things around at AMD? In an interview with CNN Business, Dr. Su described her strategy:

“The most important thing for us as we thought about what AMD would look like back in 2014 is: What are we good at? And what we’re good at is making high-performance microprocessors and that meant actually saying that we weren’t going to do some things. At the time mobile was a very exciting field, but it wasn’t what we were fundamentally good at so we really focused on our core. I know it sounds simple, but it actually takes a long time to build a sustainable product roadmap.”

Forgoing opportunities in mobile and Internet of Things technologies to focus on high-powered processors was probably more difficult than it sounds. Tech executives are notorious “shiny object chasers”. They love to be part of the next big thing, and presenting new directions in exciting new fields may have sounded great to weary investors back in 2014. But focusing on its core competency was no small task. It meant delivering innovations in both technology and pricing for competitive, growing markets like gaming, artificial intelligence, VR and data center computing.

AMD Diversification

When Dr. Lisa Su began working for AMD in 2012, only 10% of company revenue was generated outside the PC market. But by 2015, just one year into Su’s tenure as CEO, that number had swelled to 40%. Of course, winning contracts to provide the chips inside both Microsoft’s Xbox One and Sony’s PlayStation 4 (both arriving late 2013) was probably a large part of boosting its non-PC revenues. Recently, the company has again earned its spot as CPU and GPU supplier for both Sony’s and Microsoft’s latest round of game consoles. Diversifying beyond the PC continues for AMD with its Epyc processors, designed specifically for data centers, which is another market Intel dominates. It’s estimated that Intel provides as much as 99% of the processors used by the three major cloud services, Amazon Web Services, Microsoft Azure and Google Cloud. But since that estimate was written, both Amazon and Microsoft have begun adding Epyc processors to their services.

Microchip Architect “Rock Star” Jim Keller

The seeds of AMD’s future success were already being sown when Dr. Su took over the reins in 2014. Since 2012, the man that VentureBeat called a “chip architecture rock star”, Jim Keller, had been leading AMD’s development team on a project to create a new x86-64 core, code-named “Zen”. Keller was a veteran of AMD’s Athlon K7 and K8 development projects and had also worked on the inside of Intel. VentureBeat’s interview with Keller reveals a real character and a grounded family man. At one point he even feigns surprise that he has a page on Wikipedia. But in tech circles he has a reputation as one of the brilliant chip engineers of our time, and his role with AMD must have been pivotal to its success.

In interviews, Keller almost seems embarrassed by the “rock star” characterization, giving most of the credit to his teams of “very smart people” at AMD. He seems like the kind of guy whose leadership skill stems from the loyalty he inspires in his people. Although we’re at least one generation removed from the original Zen architecture his team developed, we’re only now realizing its impact across the CPU industry.

The first generation of CPUs based on AMD’s Zen microarchitecture, with up to eight cores in the Ryzen 7 line, would launch in 2017. The 1000-series Ryzen processors had arrived! The Zen architecture would continue to evolve into Zen2, the current generation of critically acclaimed CPUs at prices consistently below comparable Intel varieties, and very soon we'll see Zen3. The continuing evolution of AMD’s Ryzen CPUs can be credited in no small part to the 7nm process of its foundry, the Taiwan Semiconductor Manufacturing Company (TSMC). Being able to shrink transistor features to that scale has been huge for incremental improvements in Zen’s architecture, allowing for greater transistor density and efficiency.

The Ryzen 3000-series proves AMD is back as a competitive price-to-performance choice compared to Intel’s current 10th Generation chips. And it looks as if the Ryzen hits will continue in future iterations. Meanwhile, Intel broke the news of delays to its 7nm processors, pushing production all the way back to 2022. The CPU giant is now planning to outsource its chip manufacturing to TSMC, a move that would have been unthinkable for the Intel of the past.

The Mind-Boggling Scale of the Nanometer

One millimeter is about the smallest thing I can actually see, even with my glasses, while one million of anything is a figure so large I cannot comprehend it. How big a room is needed to fit one million sandwiches? Who knows!

But TSMC's new manufacturing process mass-produces chips built on a 7-nm scale. To give you an idea of how small a scale that is, one nanometer is a millimeter divided into one MILLION equal parts. Mind = Boggled!
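For anyone who wants to check the arithmetic, here is a minimal sketch of the unit conversion. The function names are made up for illustration, and the "7 nm" figure is treated purely as a length, not as a precise description of any real transistor layout.

```python
# Unit-scale sketch: how many nanometers fit in one millimeter?
# Function names are hypothetical; "7 nm" is treated as a plain length.
NM_PER_MM = 1_000_000  # 1 mm = 1,000,000 nm by SI definition


def mm_to_nm(length_mm):
    """Convert a length in millimeters to nanometers."""
    return length_mm * NM_PER_MM


def count_spans(length_mm, span_nm):
    """How many spans of span_nm fit end to end inside length_mm."""
    return mm_to_nm(length_mm) / span_nm


one_mm_in_nm = mm_to_nm(1)
spans_of_7nm = count_spans(1, 7)

print(f"1 mm = {one_mm_in_nm:,} nm")
print(f"7 nm spans that fit in 1 mm: {spans_of_7nm:,.0f}")
```

In other words, roughly 143 thousand 7-nm spans would line up across that single millimeter I can barely see.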

AMD User Growth

The ball is in Intel’s court for its 11th generation of CPUs, due to launch any time now. We will see some interesting new processors, but honestly, the best thing about new processor releases is the trickle-down price reductions throughout the lineup. For now, all signs show AMD biting into Intel’s still dominant market share. One readily available metric shows us which CPUs are popular with the PC gaming market, a tough crowd if there ever was one. Games distributor Steam publishes a monthly report that provides insight into the kinds of hardware and software its users are running, and Team Red has shown consistent month-over-month growth since spring. While these aren’t overall sales figures, Steam is the largest games distributor online, and if there’s one way the hardcore-gaming crowd is reliable, it’s setting the hardware trends that spread to the rest of the PC world.

Monthly AMD CPU-Users on Steam

Considering that the other 70+% of Steam users are running Intel CPUs, you get an idea of the giant AMD is up against.

Graphics Processors (GPU)

The pattern for AMD’s GPU releases may look suspiciously familiar because it’s another market where AMD struggles against an entrenched rival, this time Nvidia. The long-standing pattern has AMD following Nvidia’s graphics technology breakthroughs with a GPU of its own. Then, after some time has passed, prices have been adjusted and AMD has updated its drivers, Team Red sometimes manages to rival the performance of Nvidia’s comparable mid-tier video cards at a slightly lower price.

July of last year (2019) marked a new chapter for AMD with the launch of the first Navi-based video cards. Navi was the codename for its new RDNA graphics-chip architecture that brought AMD into direct competition with Nvidia’s top performing graphics cards. Navi (RDNA) would have been in development around the same time as Zen over at AMD’s Radeon Technologies Group. When AMD bought ATI it inherited a talented engineer named Raja Koduri who would be put in charge of the division responsible for AMD’s discrete GPUs. The Radeon Technologies Group would have been Koduri’s playground when RDNA architecture was in development, but we’ll hear more about Koduri later.

The twin efforts of RDNA and Zen seem to have paid off, mirroring each other’s success in their respective markets. Back in 2014 when Dr. Lisa Su took over at AMD, one can only imagine the high-stakes gambles playing out behind the scenes as the company invested dwindling resources into two completely distinct chip designs. This would make for some dramatic material for Netflix or HBO. Give it a little “Halt and Catch Fire” vibe, and I think you’d have a hit.

Radeon Technologies Group

The launch of AMD’s RX 5000-series graphics cards based on the new Navi/RDNA architecture back in 2019 marked a turning point for AMD in the graphics industry. For the first time since its dark days, the company released a graphics card that eclipsed rival Nvidia’s in price-to-performance. AMD had a hit with possibly the most widely recommended graphics card on the Internet. The Radeon RX 5700 XT isn’t the most powerful consumer graphics card, but through early 2020 it has been the price/performance contender to beat. According to Tom’s Hardware, the RX 5700 XT lags about 4% in overall performance behind Nvidia’s RTX 2070 Super, for around half the price. The RTX 2070 Super is itself Nvidia’s “why pay more?” card, offering significant value over the company’s own heavy hitters like the RTX 2080 Ti and RTX 2080 Super for a 10-to-15% performance dip.
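To put a number on "price-to-performance", here is a rough back-of-the-envelope sketch. It takes the figures quoted above at face value (about 4% slower, around half the price) and normalizes the RTX 2070 Super to 1.0 on both axes. The function name is hypothetical and the inputs are illustrative ratios, not measured benchmark data or actual retail prices.

```python
# Back-of-the-envelope price-to-performance comparison.
# Inputs are the rough ratios quoted in the article, not benchmark data.


def perf_per_dollar(relative_perf, relative_price):
    """Performance delivered per unit of price, both relative to a baseline."""
    return relative_perf / relative_price


# Baseline: RTX 2070 Super normalized to 1.0 performance at 1.0 price.
rtx_2070_super = perf_per_dollar(1.00, 1.00)

# RX 5700 XT: about 4% slower at roughly half the price (assumed ratios).
rx_5700_xt = perf_per_dollar(0.96, 0.50)

advantage = rx_5700_xt / rtx_2070_super
print(f"RX 5700 XT value advantage: {advantage:.2f}x performance per dollar")
```

Change the price ratio to match whatever the cards actually cost where you shop and the value gap moves accordingly, which is exactly why street prices matter more than spec sheets.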

While all three of these current top performers are capable of 1440p gaming, only the AMD cards ignored a certain graphics rendering technique known as “ray tracing”. Unfortunately, the persistent absence of ray tracing has grown into an open sore for the Radeon Technologies Group. When ray tracing compatibility was announced by Nvidia all the way back in August 2018 with its RTX Turing GPU, many assumed it would only be a matter of time before an AMD driver update would magically turn on ray tracing for some of its upper-echelon cards, but that never happened. Statements from AMD execs over the past year never clarified whether or not they would end the ray tracing envy suffered by the kids who spent a school year’s worth of milk money on a brand new RX 5700 XT. Dr. Lisa Su confirmed ray tracing was in development, while David Wang, VP of engineering at Radeon Technologies Group, said:

“Utilization of ray tracing games will not proceed unless we can offer ray tracing in all product ranges from low end to high end.”

By now the answer is clear: the “product range” Wang was talking about meant future products. But that future is almost here.

The Next Round in the Graphics Card War - Big Navi vs. Ampere

These are exciting times for gamers and video-creators alike. Worldwide, we’re getting ready with a bowl of popcorn to watch the battle of next-gen graphics technology between Nvidia’s Ampere and AMD’s Big Navi. Big Navi is the codename for RDNA2, the evolution of AMD’s RDNA architecture. And the pre-release battle is on! A year ago AMD returned to form as a cost-effective alternative to Nvidia, but this time around, with ray tracing solved and 1440p performance in its sights, AMD may be gunning for top spot overall.

This year will also see the launch of both Microsoft’s and Sony’s next-gen game consoles over the Christmas season. Both Xbox Series X and PlayStation 5 will include custom versions of AMD’s Zen2 CPU and RDNA2 GPU, and both will support ray tracing and 1440p resolution.

Graphics Competition from Intel

While everyone is talking about graphics cards from Nvidia and AMD, Intel has been discreetly building a discrete GPU of its own. Yes, the company that was caught flat-footed on CPU technology has decided to compete with AMD in another arena. I know what you're thinking. An Intel graphics card... seriously?

Why Intel Xe Graphics Could be a Future Performer

When Intel confirmed plans in June 2018 to build discrete GPUs, it hadn’t played the dedicated GPU game since 1998. Given its notoriously low-performance integrated graphics solutions, few believe Intel will present serious competition to Nvidia or AMD in the enthusiast market. But Intel has made its intentions clear: it is targeting your hardcore gaming rig with its Xe Graphics cards, launching sometime next year. Don’t laugh! Intel has a not-so-secret weapon for the upcoming graphics card wars.

It turns out that when Intel was planning its new discrete graphics division it poached key talent from AMD. Two of the individuals that had a hand in AMD’s dramatic turnaround have moved over to Team Blue. Included among the heads Intel hunted are AMD veterans, Raja Koduri and the “Chip Architect Rockstar” himself, Jim Keller. With this level of talent behind Xe Graphics, Intel will be one to watch. Although, we recently learned that Keller left Intel in June, after just two years as the company was ramping up its Xe Graphics division.

But it was a real surprise to see former ATI/AMD lifer Raja Koduri leave. He’s the man many say was most responsible for building up AMD’s Radeon Technologies Group. It was his baby that developed the Vega and RDNA graphics architectures. On why he left AMD, Koduri said in a Barron's interview:

"When I took a break from AMD my thinking was, What am I going to do for the next 10 years? What is the problem statement for the world for the next 10 years? Where I landed was, we’re in the middle of a data explosion. The amount of data that is being generated in the world is way more than our ability to analyze, understand, and process."

When an incredible mind like Koduri contemplates solving problems for the “world”, he means it literally. The way he intends to tackle this particular world problem may allude to one of the unique capabilities of GPU technology itself. Problems associated with our world’s data overload are indeed liable to be solved in GPU development, and may provide clues as to why Intel seems so committed to going deep into the discrete graphics space... Deep Learning.

Deep Learning is an area of Artificial Intelligence (AI) that often runs on specialized multi-core graphics processors. Because they contain crazy core-counts compared to CPUs, GPUs possess a unique ability to quickly compute many threads in parallel, whereas CPUs emphasize fewer cores running fewer separate threads, each at a much higher speed. Just imagine how many simultaneous but comparatively simple computations are running as a GPU keeps every pixel in the right place while you’re blasting demonic aliens in a first-person shooter like Doom. This makes GPU technology perfect for the area of AI that mimics learning in the human brain. The brain constantly makes parallel computations as you contemplate what’s for lunch and simultaneously regulate body temperature, breathing, heart-rate and many more involuntary responses to your environment. Deep Learning AI may someday help us make sense of the reams of data coming at us every day on the Internet, while evaluating what’s valuable to the operator’s goals as it works, literally learning and fine tuning itself. It’s definitely a market that will experience growth in coming years, and chip companies like Nvidia and AMD are already involved. It’s no wonder Intel wants in.
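To make the "many simple computations at once" idea a little more concrete, here is a toy sketch in plain Python. It is only an analogy: Python threads are not a GPU, the tiny 8x4 "screen" and the `shade` function are invented for illustration, and real graphics code would be written for the GPU itself. The point is the shape of the work, where every pixel can be computed without knowing anything about its neighbors, so the job can be split among as many workers as you have.

```python
# Toy illustration of data-parallel work: the same simple function is
# applied independently to every pixel. Threads stand in for GPU cores
# here purely as an analogy; this is not how a real GPU is programmed.
from concurrent.futures import ThreadPoolExecutor

WIDTH, HEIGHT = 8, 4  # a tiny hypothetical "screen"


def shade(pixel_index):
    """Compute one pixel's brightness from its position alone."""
    x = pixel_index % WIDTH
    y = pixel_index // WIDTH
    return (x + y) % 256  # made-up brightness ramp


pixels = range(WIDTH * HEIGHT)

# CPU-style: a single worker walks through the pixels one at a time.
sequential = [shade(p) for p in pixels]

# GPU-style in spirit: many workers share the pixels. This is safe
# because no pixel's result depends on any other pixel.
with ThreadPoolExecutor(max_workers=8) as pool:
    parallel = list(pool.map(shade, pixels))

print("Same image either way:", sequential == parallel)
```

Deep learning workloads have a similar shape, with huge batches of small, independent multiply-and-add steps, which is why they map so naturally onto hardware built for pixels.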

Pre-Release Hype-Train Competition

The final days before a competitive tech product launch are a bit like the final days of the regular season before the playoffs in sports. It’s all about positioning! Leaks, rumors and announcements play a part in providing a picture of what to expect. But they can also obscure, while “faking out” the competition.

In a recent video, tech YouTuber JayzTwoCents talks about the game Nvidia and AMD are playing as they "psych each other out" with specs and capabilities prior to the ultimate competition in the retail marketplace.

Jay says:

“I have no doubt… Nvidia and AMD are trying to fake each other out. When RDNA (the first Navi cards, 2019) launched, we also saw reduced prices, (and) the launch of (additional) new cards (at lower price-points). I think Nvidia has the capability of doing a last-minute switcheroo making (RTX 3080/Ampere) faster than it’s projected to be right now. I think AMD is going to launch its cards after Nvidia, but I suspect Nvidia might come up with some magic driver that gives us 5-to-10% performance increases across the board, because I think they’re going to artificially limit the performance of their cards so they can make that move later… and that makes them look like it’s all about the customer, they’re all about the performance and value.

So, Nvidia is going to launch its cards, then AMD cards are going to come out and then Nvidia is going to flip a digital switch in these cards and make everyone happy. I suspect these cards are going to be out prior to November. Rumors are saying November, but I think September and October. I think Nvidia is going to launch their cards first and AMD is going to launch second.” - JayzTwoCents

The release date for Nvidia’s 3000 series (a.k.a. Ampere) may be as early as August 31. If Jay’s two cents are correct, it will soon be followed by AMD’s first Big Navi-based card.

Regardless of whose hardware you run in your PC, competition from Big Navi is good for the industry as a whole. At the very least it keeps Nvidia cost-competitive. Few have suffered price gouging like PC-builders buying graphics cards recently. Between the crypto-mining boom and Nvidia’s lack of competition during AMD’s dark days, high-performing graphics card prices had been inflated.

In the end, I don’t think anybody really expects AMD’s Big Navi to blow the doors off Nvidia’s Ampere. What will probably happen is AMD will once again offer a great price-to-performance alternative. The difference between Nvidia and Intel as AMD competitors is that GPU technology is Nvidia’s exclusive game. Staying number one in consumer GPU tech is what keeps Nvidia up at night... and of course the nightmares from AMD.

However, the main event in GPU competition is still to come! In coming years Radeon graphics will only be refined further as new players like Intel and, rumor has it, even Huawei get into the discrete GPU business. With growing technologies like Deep Learning AI employing GPU technology, it’s not just a cottage industry exclusive to gaming enthusiasts anymore. Who knows, maybe someday, with advanced GPUs powering AI, the next big breakthrough in GPU technology will be developed by the GPU itself.